When Models Meet the Real World: Lessons from Production ML

We built a churn prediction model to identify which users would leave within one week. In the first phase of testing, which was done offline, the model looked strong, with 80% precision and 75% recall, and the results were well received. Then we moved to production, and things changed very quickly: the model turned into a catastrophe. It missed almost all of the early churners, and production precision dropped by 50% compared with the offline test. It was an embarrassing moment for us, because we had spent a lot of time checking the model thoroughly before the official launch.

From all indications, the failure was caused not by the model itself but by external factors outside its control. Working at Yandex and now at eBay, I have come to realise that even if your offline metrics look great, there is always a gap between test data and production data. That gap affects not only model performance, but also user experience, business impact, and trust in ML systems.

The hard truth that people rarely talk about is that you cannot be sure how a machine learning model will behave in the real world, even after a series of tests. Production is messy and constantly evolving. The rest of this article is about understanding that gap: how it arises, how to detect it, and how to design processes so that it doesn’t surprise you in production.

Section 1: The Three Data Gaps Nobody Warns You About

1.1 Concept Drift: Working at eBay has given me first-hand experience of how often user behaviour shifts, which makes it hard for a model trained on one month's data to perform well in the months that follow. I saw the same thing at Yandex: users behave differently on weekdays and at weekends, and search queries change with trends and seasons. This, in a nutshell, is concept drift. One way to address it is a temporal validation split: a model trained on one month's data should be tested on data from a later month, and for a search-based service the test set should cover a full seasonal cycle.
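A temporal validation split can be sketched in a few lines. This is a minimal illustration, not the pipeline we used: the `event_date` and `churned` field names and the sample rows are hypothetical.

```python
from datetime import date


def temporal_split(rows, cutoff):
    """Train on rows strictly before the cutoff, test on rows on or after it."""
    train = [r for r in rows if r["event_date"] < cutoff]
    test = [r for r in rows if r["event_date"] >= cutoff]
    return train, test


# Hypothetical records; a real split would read from the feature store or logs.
rows = [
    {"event_date": date(2024, 4, 28), "churned": 0},
    {"event_date": date(2024, 5, 3), "churned": 1},
    {"event_date": date(2024, 5, 30), "churned": 0},
    {"event_date": date(2024, 6, 2), "churned": 1},
    {"event_date": date(2024, 6, 15), "churned": 0},
]

# Everything up to the end of May trains the model; June is held out.
train, test = temporal_split(rows, cutoff=date(2024, 6, 1))
```

Unlike a random split, no test row is older than any training row, so the evaluation sees the same "future" that production will.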

1.2 Sampling Bias in Test Sets: Curate and clean data sets with caution, because test sets often under-represent the rare or noisy examples that appear in real-world traffic. As a result, a test set may look “representative” but still fail to capture the long tail of real usage, and the faults that go undetected can cause serious problems for users in production. To reduce this risk, a good practice is to set up a production mirror that routes a small percentage of real production traffic through the model, so that errors or biases missed by the test set surface before the full launch.

1.3 Feature Pipeline Divergence: This failure is often embarrassing but highly preventable. Training code typically runs in a Jupyter notebook, while production code runs in a latency-sensitive service, and even a small mismatch between the two can silently ruin a model. I recall developing a model for user classification: in the training pipeline, we represented the “country” feature using short codes like US, UK, DE, while in production, the integration team sent full country names such as United States, United Kingdom, Germany. From a human perspective, the data looked correct, but for the model, this was a completely different, unseen vocabulary. Depending on the encoding, most values were mapped to an “unknown” bucket, and model quality dropped significantly for days before we traced it back to this representation mismatch. To prevent this kind of error, minimise training-serving skew: reuse the same preprocessing logic in both environments and, before deployment, verify that the features computed in production match those used during training.
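One inexpensive guard against this kind of mismatch is to compare a sample of production values with the vocabulary seen in training. The sketch below is illustrative only: the function names and the 5% tolerance are assumptions, not what we ran.

```python
# Vocabulary of the "country" feature as seen during training.
TRAINING_COUNTRY_VOCAB = {"US", "UK", "DE"}


def unknown_rate(values, vocab):
    """Fraction of values that fall outside the training vocabulary."""
    values = list(values)
    if not values:
        return 0.0
    return sum(1 for v in values if v not in vocab) / len(values)


def check_feature_parity(values, vocab, max_unknown_rate=0.05):
    """Raise if too many production values were never seen in training.

    The 5% default tolerance is an assumed value; tune it per feature.
    """
    rate = unknown_rate(values, vocab)
    if rate > max_unknown_rate:
        raise ValueError(
            f"{rate:.0%} of sampled values are outside the training vocabulary"
        )
    return rate


# A sample that mirrors the incident: full names instead of short codes.
production_sample = ["United States", "United Kingdom", "Germany", "US"]

try:
    check_feature_parity(production_sample, TRAINING_COUNTRY_VOCAB)
except ValueError as err:
    print(f"Blocking deployment: {err}")
```

Run as a pre-deployment check or a periodic job, a test like this flags the mismatch within minutes rather than days.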

Section 2: Building a Production-Aware Testing Phase

2.1 Shadow Mode Deployment: This practice, also known as dark launching, means deploying a new model alongside the existing one in production without affecting the user experience. Both models receive the same live traffic, but only the old model's predictions are served to users; the new model's outputs are logged and analysed. At eBay, this method was very useful to us: it helped us detect and contain errors in production. Shadow mode is best used as a first step when introducing a new model, because it gives no feedback on how users would react to the new model's predictions.
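The core of shadow mode fits in one function. The sketch below assumes models are plain callables and uses an in-memory list as the log; `predict_with_shadow` and its parameters are hypothetical names, and a real service would typically run the shadow call asynchronously so that it adds no latency.

```python
import logging

logger = logging.getLogger("shadow")


def predict_with_shadow(features, live_model, shadow_model, shadow_log):
    """Serve the live model's prediction and record the shadow model's output.

    A failure in the shadow model must never affect the user-facing response.
    """
    live_prediction = live_model(features)
    try:
        shadow_prediction = shadow_model(features)
        shadow_log.append(
            {"features": features, "live": live_prediction, "shadow": shadow_prediction}
        )
    except Exception:
        logger.exception("Shadow model failed; live response is unaffected")
    return live_prediction


# Toy stand-ins for the two models.
def old_model(features):
    return int(features["days_inactive"] > 14)


def new_model(features):
    return int(features["days_inactive"] > 10)


log = []
served = predict_with_shadow({"days_inactive": 12}, old_model, new_model, log)
# The user sees old_model's answer; the disagreement is only recorded in log.
```

Comparing the `live` and `shadow` fields over a few days of traffic shows where the new model disagrees with the old one before any user is exposed to it.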

2.2 Canary Testing with Real Data: A live test typically follows once shadow mode testing is complete. Canary deployment means exposing the new model to a small share of users, with as little as 1% to 5% of traffic. In this phase, we monitor metrics such as memory consumption, error rates, and latency, and trigger a rollback if the new model shows performance degradation or abnormal drift patterns. An example of a phased rollout schedule is 1%, 5%, 10%, 25%, 50%, 100%. This method once helped us detect an error that shadow mode would have missed.
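One common way to implement such a schedule is to hash each user into a stable bucket, so that a user who enters the canary group at 1% stays in it at every later stage. The sketch below is an assumption about how this could be wired, not a description of our system; the function names are hypothetical.

```python
import hashlib

# Percent of traffic at each stage, taken from the schedule above.
ROLLOUT_STAGES = [1, 5, 10, 25, 50, 100]


def user_bucket(user_id: str) -> int:
    """Map a user to a stable bucket in the range 0..99."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return int(digest[:8], 16) % 100


def serves_canary(user_id: str, stage_percent: int) -> bool:
    """True if this user should receive the new model at the given stage."""
    return user_bucket(user_id) < stage_percent


def next_stage(current_percent: int, healthy: bool) -> int:
    """Advance one stage if metrics are healthy, otherwise roll back to 0%.

    current_percent must be one of ROLLOUT_STAGES.
    """
    if not healthy:
        return 0
    idx = ROLLOUT_STAGES.index(current_percent)
    return ROLLOUT_STAGES[min(idx + 1, len(ROLLOUT_STAGES) - 1)]


stage = 1
stage = next_stage(stage, healthy=True)   # moves from 1% to 5%
stage = next_stage(stage, healthy=False)  # degradation detected: back to 0%
```

Hashing rather than sampling at random keeps each user's experience consistent across requests and makes canary-group metrics comparable from one stage to the next.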

2.3 Adversarial Test Generation: Create edge cases that deliberately stress your model. Use production logs to discover rare patterns that could cause errors in production and add them to the automated test suite. Where appropriate, use adversarial testing tools (for example, TextAttack or Counterfit) to systematically perturb inputs and find weaknesses in the model. This helps the model withstand the challenges of the wild.
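Before reaching for a dedicated tool, the idea can be illustrated with a hand-rolled perturbation test. The sketch below does not use TextAttack or Counterfit; it applies a single simple perturbation (swapping two adjacent characters, as in a typo) and reports inputs whose prediction flips. All names and the toy model are hypothetical.

```python
import random


def perturb(text: str, rng: random.Random) -> str:
    """Swap two adjacent characters at a random position, like a typo."""
    if len(text) < 2:
        return text
    i = rng.randrange(len(text) - 1)
    chars = list(text)
    chars[i], chars[i + 1] = chars[i + 1], chars[i]
    return "".join(chars)


def find_unstable_inputs(model, texts, n_variants=20, seed=0):
    """Return (original, variant) pairs where a small typo changes the prediction."""
    rng = random.Random(seed)
    failures = []
    for text in texts:
        expected = model(text)
        for _ in range(n_variants):
            variant = perturb(text, rng)
            if model(variant) != expected:
                failures.append((text, variant))
                break  # one counterexample per input is enough
    return failures


# Toy model: flags a query as a refund request by exact keyword match.
def toy_model(text):
    return "refund" in text.lower()


# Queries like these would normally be mined from production logs.
failures = find_unstable_inputs(toy_model, ["refund", "where is my order"])
```

Each failing pair can be added to the automated test suite as a regression case.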

Section 3: Monitoring Post-deployment

In production, continuous monitoring is crucial, especially after the initial deployment.

3.1 Data Drift Detection: To catch problems early, we need to monitor not only business metrics, but also how the input data itself changes over time. Metrics like the Population Stability Index (PSI) and Kullback-Leibler (KL) divergence can be used to detect data drift and quantify how production data shifts before it significantly impacts model performance. We use PSI to monitor feature distributions by comparing each feature’s current week of production data against a reference distribution. For example, PSI values between 0.1 and 0.25 might suggest a slight drift, while values over 0.25 indicate a drift requiring intervention. In one case, we saw a change in the length of user queries: the PSI for that feature exceeded 0.15, which we treat as a warning threshold for it. In response, we retrained the model with more recent data and explicitly included longer queries in the training set, before users noticed a drop in quality. Without continuous monitoring, we would have discovered the issue only after users started complaining.
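PSI is simple to compute: bin both samples with the same edges, then sum (current − reference) × ln(current / reference) over the bins. The sketch below is a minimal pure-Python version; the bin edges, the small `eps` floor for empty bins, and the sample values are illustrative assumptions, while the 0.1 and 0.25 cut-offs are the ones quoted above.

```python
import math
from bisect import bisect_right


def bin_fractions(values, edges, eps=1e-6):
    """Share of values in each bin defined by sorted edges.

    Empty bins are floored at eps so the logarithm stays defined.
    """
    if not values:
        raise ValueError("values must not be empty")
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [max(c / len(values), eps) for c in counts]


def psi(reference, current, edges):
    """Population Stability Index between a reference and a current sample."""
    ref = bin_fractions(reference, edges)
    cur = bin_fractions(current, edges)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


def drift_level(value):
    """Map a PSI value to the bands described in the text."""
    if value < 0.1:
        return "stable"
    if value <= 0.25:
        return "slight drift"
    return "intervene"


# Hypothetical query lengths (in words): reference week vs. current week.
reference_lengths = [2, 3, 3, 4, 2, 5, 3, 4, 3, 2]
current_lengths = [4, 6, 7, 5, 8, 3, 6, 9, 7, 5]
level = drift_level(psi(reference_lengths, current_lengths, edges=[3, 5, 7]))
```

In practice the bin edges are usually fixed once from the reference data (for example, its deciles) so that weekly values stay comparable.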

3.2 Feedback Loops: Production failures should not be seen as discouraging; they can be used to create additional training and test cases, because we learn from our mistakes. Data drift or unforeseen events that disrupt production can be turned into new examples for further training. One effective pattern is a continuous learning system. For example, for a classification model, we track misclassifications: some samples are automatically sent back to the training or test set with their corrected labels, while more difficult cases might require human review and annotation. Balance is needed, though, as too many feedback loops can make models unstable.

3.3 The Rollback Plan: Even with all of the above, some deployments will go wrong. That’s why a clear, fast rollback strategy is critical. In practice, this means defining automatic or semi-automatic triggers that monitor key performance and reliability metrics (e.g., conversion rate, CTR, latency, error rate), compare them against a baseline or control model, and initiate rollback when degradations exceed agreed thresholds. For example, a 3-5% drop in a core metric may indicate that an investigation is needed, while 10% is a strong sign to stop the rollout and revert to the previous version. The exact numbers depend on the product and risk tolerance, but the key idea is to decide these thresholds in advance, not during a crisis. For this to work, old models should not be discarded. They should be stored, versioned, and ready to be reactivated quickly as backup plans. Notably, in many modern production systems, rollback is not a redeployment but a configuration switch. This makes reverting a matter of minutes rather than hours.
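A threshold-based trigger of this kind can be sketched as follows. The 3% and 10% defaults mirror the example figures above, while the function names, the model version strings, and the dictionary-based config are hypothetical.

```python
def relative_drop(baseline: float, candidate: float) -> float:
    """Relative drop of the candidate vs. the baseline.

    Assumes a higher-is-better metric such as conversion rate or CTR;
    for latency or error rate the comparison would be inverted.
    """
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - candidate) / baseline


def rollout_action(baseline, candidate, investigate_at=0.03, rollback_at=0.10):
    """Decide what to do, using thresholds agreed before the rollout."""
    drop = relative_drop(baseline, candidate)
    if drop >= rollback_at:
        return "rollback"
    if drop >= investigate_at:
        return "investigate"
    return "continue"


def apply_rollback(config):
    """Rollback as a configuration switch: point serving at the previous version."""
    return {**config, "active_model": config["previous_model"]}


# Hypothetical serving config with the old version kept ready.
serving_config = {"active_model": "churn-v2", "previous_model": "churn-v1"}

# Conversions per 1,000 sessions: control model vs. new model.
if rollout_action(baseline=100.0, candidate=88.0) == "rollback":
    serving_config = apply_rollback(serving_config)
```

Because the previous version stays stored and addressable, the revert only changes which model the serving layer points to.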

Section 4: Three Principles for Long-Term Success

My conclusion from many years at Yandex and now at eBay is that three principles can be relied upon.

(1) Test sets should age like production data: to keep test sets relevant, refresh them with recent production samples. For instance, a test set for June should reflect June traffic patterns, not those of another month. Keeping the training and test sets up to date reduces surprises and improves performance.

(2) Validation is continuous, not one-time: pre-deployment testing matters less than post-deployment validation, because production is where you find out how effective the model really is. Many teams spend most of their effort on pre-deployment testing and relatively little on the post-deployment phase, which is a risky imbalance. It is production that determines the success of a model.

(3) Build for failure: assume that unexpected situations will occur and design a resilient system that can handle them. The question is not whether a model will ever fail, but how the system responds when it does. That means having clear recovery paths, for instance, keeping multiple model versions that can be quickly reactivated if an issue arises.

Conclusion

No amount of careful offline evaluation can eliminate the problems caused by the gap between testing and production. Although this gap cannot be closed entirely, it can be managed through thorough monitoring and strategic testing, such as timely data drift monitoring. The painful failures I went through helped me understand this better and be more strategic in our testing and production phases, which has saved me further costs and reduced mistakes. This is not a one-time step but an ongoing practice that requires meticulous planning. These procedures may seem expensive, but they cost far less than losing user trust or revenue when things go wrong in production.

:::info HackerNoon editor’s note: this article has been inaccurately flagged for being AI assisted, possibly because of its academic nature and tone.

:::

\

You May Also Like

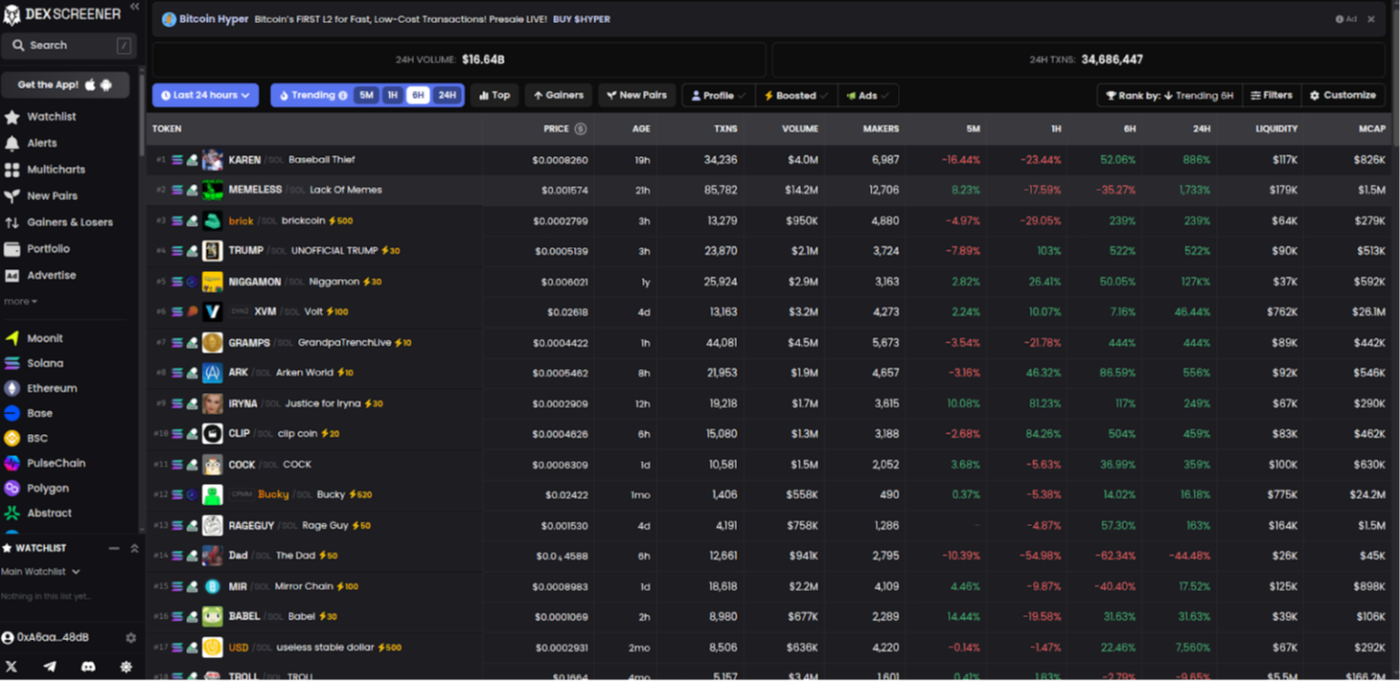

Building a DEXScreener Clone: A Step-by-Step Guide

Which DOGE? Musk's Cryptic Post Explodes Confusion