The Battle for the Borders: How AI and Cyber Intelligence Are Reshaping Statecraft

From Tel Aviv to Silicon Valley, a new generation of AI-driven intelligence platforms is redefining how states secure their borders.

Borders are increasingly engineered systems rather than fixed geographic constraints. In practice, they now function as distributed decision environments where data ingestion, signal processing, and human judgment are tightly coupled under time pressure. The contemporary battle for the borders is therefore less about physical interdiction and more about computational capability: how effectively a state can collect, fuse, analyze, and act on heterogeneous information streams at scale.

This shift reflects structural changes in threat topology. Migration flows are networked rather than linear. Human trafficking and smuggling operations coordinate digitally across jurisdictions. Fraud, identity manipulation, and affiliation concealment occur upstream, often long before physical arrival at a port of entry. As a result, borders have become sites of probabilistic decision-making, where authorities must continuously evaluate risk under uncertainty rather than rely on deterministic checks.

At the center of this transformation is an intelligence stack composed of multiple technical layers: large-scale data integration, public-information ingestion, OSINT workflow automation, and decision-grade risk assessment. Each layer addresses a distinct computational problem, and no single system solves all of them.

Modern border control is therefore defined less by enforcement capacity and more by system performance. Authorities must resolve identity, intent, and risk using incomplete data, noisy signals, and asymmetric information. Latency matters. False positives carry operational and political cost. False negatives carry security risk. Intelligence systems operating in this domain must balance throughput, accuracy, explainability, and auditability.
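The trade-off between false positives and false negatives can be made concrete with a toy example. The sketch below is illustrative only: the scores, labels, and the `error_rates` helper are hypothetical and do not describe any agency's or vendor's system. It shows how moving a single flagging threshold shifts one error rate at the expense of the other.

```python
from dataclasses import dataclass


@dataclass
class Rates:
    threshold: float
    false_positive_rate: float
    false_negative_rate: float


def error_rates(scores, labels, threshold):
    """Compute error rates when every case scoring >= threshold is flagged.

    scores: hypothetical risk scores in [0, 1]
    labels: True if the case was genuinely high-risk (toy ground truth)
    """
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and not y)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y)
    negatives = sum(1 for y in labels if not y)
    positives = sum(1 for y in labels if y)
    return Rates(
        threshold,
        fp / negatives if negatives else 0.0,
        fn / positives if positives else 0.0,
    )


# Toy data, invented purely for illustration.
scores = [0.1, 0.3, 0.4, 0.6, 0.7, 0.9]
labels = [False, False, True, False, True, True]

for t in (0.2, 0.5, 0.8):
    print(error_rates(scores, labels, t))
```

On this toy data, a low threshold misses no high-risk case but flags most low-risk ones, while a high threshold does the reverse. Choosing the operating point is a policy decision as much as a technical one.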

Within this context, different platforms have emerged to address different parts of the pipeline.

Palantir

Palantir occupies the infrastructure layer of the border intelligence stack. Over two decades, it has evolved into a system designed to operate at institutional scale, integrating structured and unstructured data across agencies, domains, and jurisdictions. Its core strength lies in data unification, schema management, and operational analytics that allow complex organizations to reason over fragmented information in near real time.

In U.S. homeland security contexts, Palantir’s platforms have been publicly reported as supporting border and immigration-related workflows involving the Department of Homeland Security and Immigration and Customs Enforcement. These deployments are notable not simply because they exist, but because they operate as production systems embedded in day-to-day operations, rather than isolated analytical tools.

From a technical perspective, Palantir functions as a persistent data backbone. It enables multiple agencies to operate over shared representations of entities, events, and processes while maintaining access controls and auditability. This orchestration capability is critical in border environments, where immigration services, law enforcement, intelligence units, and policy bodies must coordinate without collapsing into a single monolithic system.

Palantir’s standing is also reflected in the scale of its government contracts. Public records indicate that DHS and ICE contract vehicles associated with Palantir have reached into the hundreds of millions of dollars over time, including widely reported expansions worth tens of millions of dollars for immigration and border platforms. These figures suggest long-term institutional reliance and operational centrality.

In terms of integration breadth and deployment scale, Palantir therefore sits in a category of its own among the platforms discussed here. Its role is less that of a point solution and more that of an operating substrate for data-driven governance. In the border domain, it sets the upper bound of integration and scale, and other, more specialized systems interoperate with it rather than replace it.

Babel Street

While integration provides the foundation, much of the most valuable signal in border security originates in publicly available data. Babel Street operates within this ingestion and enrichment layer, focusing on transforming public-information streams into structured intelligence that can be queried, linked, and analyzed.

Technically, Babel Street addresses the challenges of multilingual data collection, entity resolution, and identity correlation across open sources. In border contexts, this is particularly relevant because affiliation, intent, and network membership are often expressed digitally, across platforms and languages, long before an individual encounters a physical checkpoint.
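To make the entity-resolution problem concrete, the following is a minimal sketch of name matching across spelling variants, using only the Python standard library. It is a generic illustration and not a description of Babel Street's methods; the `normalize` and `similarity` helpers are hypothetical names. Production systems would also need transliteration across scripts, which this sketch does not attempt.

```python
import unicodedata
from difflib import SequenceMatcher


def normalize(name: str) -> str:
    """Strip diacritics, collapse whitespace, and case-fold a name."""
    decomposed = unicodedata.normalize("NFKD", name)
    without_marks = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    return " ".join(without_marks.casefold().split())


def similarity(a: str, b: str) -> float:
    """Return a similarity ratio in [0, 1] between two normalized names."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()


# Spelling variants of the same name normalize to the same string.
print(normalize("José  Müller"))
print(similarity("Jose Muller", "José Müller"))
# An unrelated name scores lower.
print(similarity("Jose Muller", "Anna Kowalska"))
```

A similarity score like this is only one weak signal. Matching on names alone produces collisions, so a match is typically treated as a candidate link for review rather than a confirmed identity.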

In the United States, Babel Street has been deployed within homeland security environments, where public-information intelligence is used to augment traditional records with contextual data. Its systems enable analysts to identify patterns, connections, and behavioral indicators that would otherwise remain diffuse across the open web.

From an architectural standpoint, Babel Street functions upstream of enforcement. It expands the signal space available to border authorities, supporting earlier-stage risk modeling and hypothesis generation. This allows downstream systems to operate with richer context, reducing reliance on binary or document-centric checks.

Fivecast

Fivecast operates in the automation and scaling layer of open-source intelligence. Its focus is not simply on collecting OSINT, but on operationalizing it through end-to-end workflows that span discovery, ingestion, analysis, and pattern recognition.

As border-relevant data increasingly appears in text, imagery, and video, the computational burden of OSINT analysis has grown substantially. Fivecast applies machine learning to reduce this burden, enabling analysts to process large volumes of open-source material while preserving analytical control.

Fivecast has also secured deployments within U.S. homeland security environments, reflecting institutional demand for scalable OSINT tooling. These deployments underscore the importance of workflow automation in border contexts, where analyst time is constrained and signal-to-noise ratios are often low.

Within the broader stack, Fivecast acts as a force multiplier. It accelerates signal extraction and normalizes open-source data into forms that can be consumed by downstream assessment and decision systems.

RealEye.ai

As intelligence pipelines mature, a critical gap emerges at the point where analysis must translate into action. This is the decision-centric layer of border intelligence, where systems are evaluated not by how much data they process, but by how effectively they support rapid, defensible determinations.

RealEye.ai reflects this design orientation. Publicly positioned around immigration, vetting, and border screening workflows, RealEye focuses on decision-grade enrichment rather than broad data aggregation. Its systems emphasize synthesizing contextual indicators such as behavioral patterns, affiliations, and narrative consistency into structured risk assessments intended for frontline use.

From a technical perspective, this approach prioritizes signal fusion and scoring over raw collection. The goal is not to maximize data intake, but to improve decision quality under operational constraints, including time pressure and incomplete information.
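Signal fusion and scoring can be sketched as a weighted combination of indicators that also records each indicator's contribution, so the result can be explained and audited. This is a simplified illustration under stated assumptions, not RealEye's implementation: the indicator names, the weights, and the `fuse` function are all hypothetical.

```python
def fuse(indicators, weights):
    """Combine available indicators into one score in [0, 1].

    indicators: mapping of indicator name -> value in [0, 1]; indicators
                that are missing are simply left out (incomplete information)
    weights:    mapping of indicator name -> non-negative weight
    Returns the fused score and the per-indicator contributions,
    sorted from largest to smallest.
    """
    total_weight = sum(weights[name] for name in indicators)
    if total_weight == 0:
        raise ValueError("no weighted indicators available")
    contributions = {
        name: weights[name] * value / total_weight
        for name, value in indicators.items()
    }
    score = sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: kv[1], reverse=True)
    return score, ranked


# Hypothetical weights and observations, invented for illustration.
weights = {"document_consistency": 1.0, "network_links": 3.0, "travel_pattern": 2.0}
observed = {"document_consistency": 0.2, "network_links": 0.8}  # one indicator missing

score, ranked = fuse(observed, weights)
print(round(score, 2))
print(ranked[0][0])
```

Renormalizing over the indicators that are actually present keeps the score comparable when data is incomplete, and the ranked contributions give a reviewer a starting point for questioning the result.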

In recent months, RealEye has publicly indicated that it has entered into a significant commercial agreement with a world-renowned intelligence organization, structured on an exclusive basis and valued in the seven-figure range. While the identity of the counterparty and contractual specifics remain confidential, such arrangements are typical in sensitive intelligence environments.

For an emerging company, this type of engagement suggests alignment with real operational requirements and validation of a decision-centric architecture. It positions RealEye as a focused and promising entrant addressing a clearly defined layer of the border intelligence stack.

The evolution of border intelligence is not a zero-sum competition between platforms. The computational problems involved—data integration, signal extraction, context generation, and decision support—are orthogonal rather than redundant.

Progress is therefore driven by complementarity. Infrastructure platforms provide scale and governance. Public-information systems expand the signal surface. OSINT automation accelerates analysis. Decision-centric tools translate insight into action.

Viewed together, Palantir, Babel Street, Fivecast, and RealEye represent interoperable components of a broader technical ecosystem. They are not rivals competing for the same function, but systems addressing different constraints within the same problem space.

The battle for the borders is already underway, and it is largely invisible. It plays out in data schemas, inference pipelines, and risk models that determine who is admitted, flagged, or deferred.

As AI and cyber intelligence continue to evolve, borders will become increasingly adaptive systems. Sovereignty, in this context, is exercised not at the fence line, but in the architecture of decision-making itself.

The gate is now digital. And the intelligence behind it will define border security for decades to come.

:::info This article is published under HackerNoon's Business Blogging program.

:::

\

You May Also Like

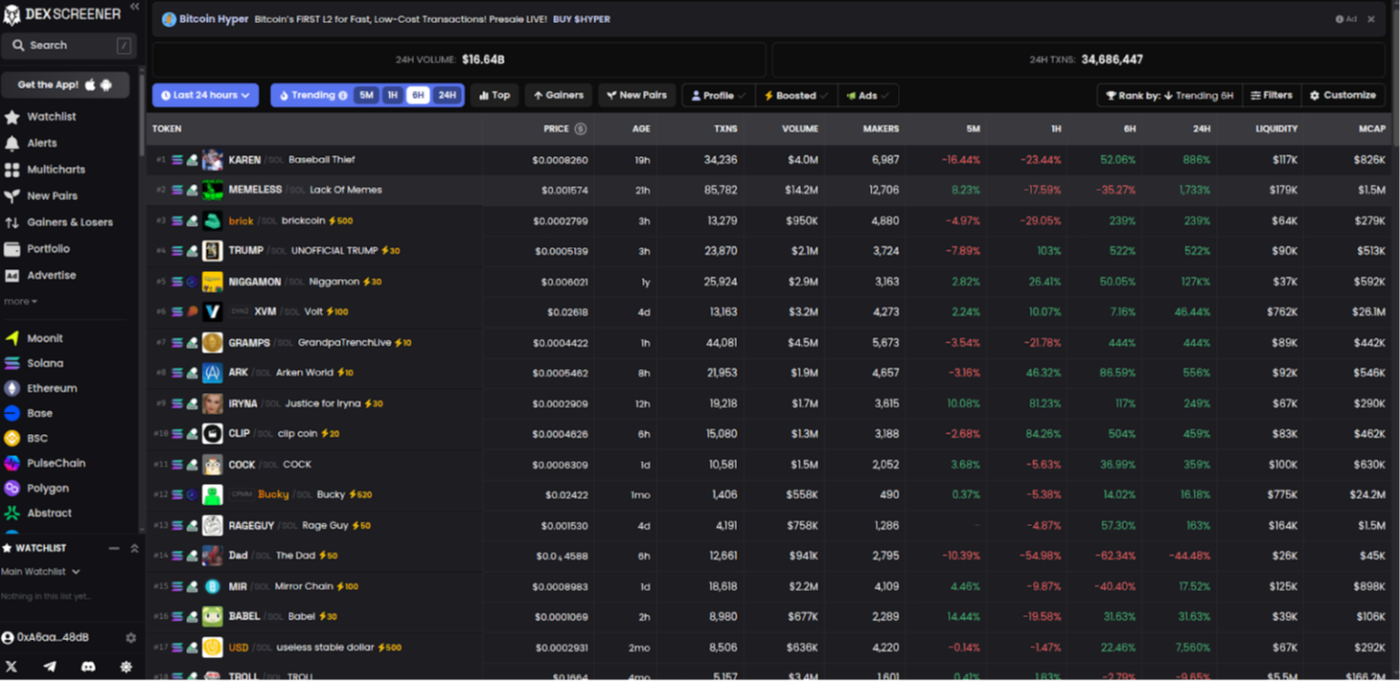

Building a DEXScreener Clone: A Step-by-Step Guide

Which DOGE? Musk's Cryptic Post Explodes Confusion